A Strategic Framework for World Leaders

By Joe Merrill

Artificial intelligence is entering its infrastructure phase. Today’s global market favors large foundation models, hyperscale data centers, and centralized compute. That preference is rational given current benchmarks and capital flows.

However, it is incomplete.

The next phase of AI competition will not be determined solely by model size. It will be determined by ease of use, orchestration, energy efficiency, and control of inference across distributed systems, all in service of higher fidelity at global scale.

Large models will remain essential. But they will not remain dominant for every task. Nations and enterprises that assume scale alone guarantees superiority risk overinvesting in centralized infrastructure while underinvesting in distributed intelligence that is easy to use.

This outline does not argue against frontier models.

It argues that frontier models are not the final architecture.

I. The Current Market Logic

The present AI economy rests on three defensible assumptions:

- Larger models achieve higher general performance.

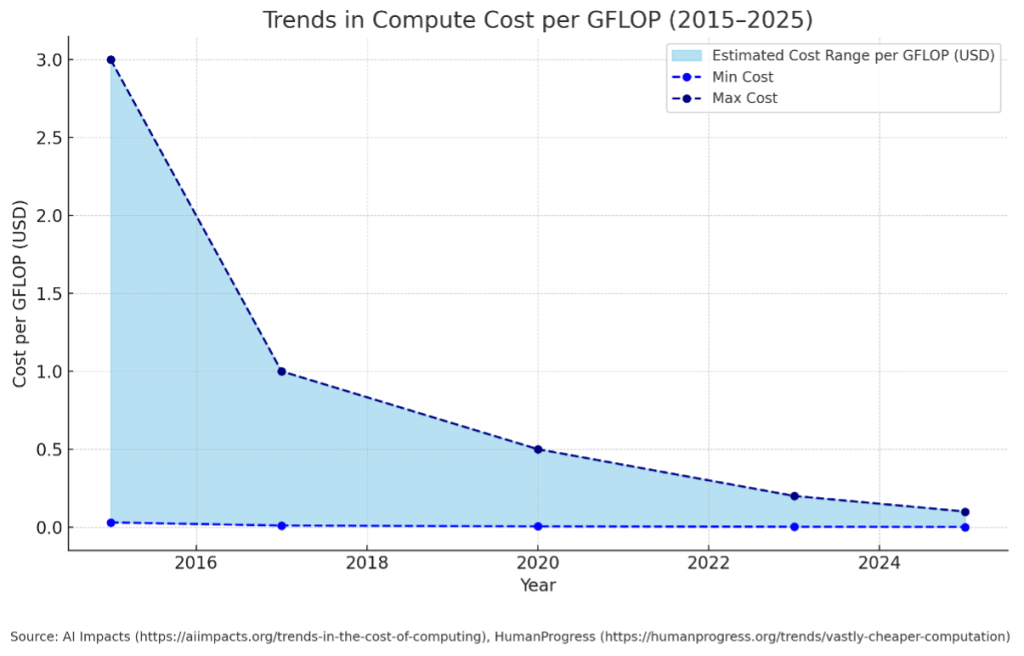

- Hyperscale infrastructure reduces marginal compute cost.

- Centralization simplifies governance and security.

These assumptions are supported by scaling laws and benchmark performance. Frontier models demonstrate remarkable cross-domain capability.

But general capability is not the same as economic optimality or high fidelity.

Most enterprise and governmental AI use cases are bounded:

- Fraud detection

- Logistics optimization

- Energy forecasting

- Compliance automation

- Defense modeling

These tasks require precision within domain constraints. They do not require universal reasoning engines.

The central question is no longer:

Who can train the largest model?

It is:

Who can deliver optimal inference at sustainable cost and under sovereign control?

II. The Immutable Law of Tensor Data

The Immutable Law of Tensor Data:

For any AI inference problem, there exists an optimal quantity and structure of information required for accurate tensor inference. Data beyond this threshold introduces inefficiency, noise, or spurious correlation.

This is not a rejection of scaling laws.

It is a refinement of them.

Scaling improves generalization when the task is unknown.

But when the task is known, excess context can:

- Increase energy consumption

- Introduce irrelevant correlations

- Reduce interpretability

- Increase attack surface

- Inflate cost
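The "irrelevant correlations" point can be illustrated with a deliberately tiny sketch. It assumes a one-nearest-neighbour classifier and two hand-made training examples; the feature values and the labels `legitimate` and `fraud` are hypothetical and chosen only to make the effect visible. It is a toy illustration of the idea, not evidence for the law itself.

```python
import math

def nearest_label(query, training):
    """Return the label of the training point closest to `query` (1-nearest-neighbour)."""
    return min(training, key=lambda item: math.dist(item[0], query))[1]

# Hypothetical data: one relevant feature (the signal) and two labelled examples.
signal_only = [((0.0,), "legitimate"), ((1.0,), "fraud")]

# The same two examples with a second, irrelevant feature appended.
signal_plus_noise = [((0.0, 5.0), "legitimate"), ((1.0, 0.0), "fraud")]

# The query's signal value (0.2) is much closer to the "legitimate" example.
print(nearest_label((0.2,), signal_only))            # legitimate
# With the irrelevant feature included, distance is dominated by noise.
print(nearest_label((0.2, 0.0), signal_plus_noise))  # fraud
```

The only difference between the two calls is the extra feature, which carries no information about the label yet changes the answer.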

The future advantage belongs to those who know:

- What information is necessary

- What information is irrelevant

- How to structure data for optimal tensor efficiency

The race is not for the largest model.

It is for the most efficient, high-fidelity signal.

III. The Role of Large Models in Future Architecture

Large models will remain critical for:

- Frontier reasoning

- Multimodal synthesis

- Model distillation

- Bootstrapping domain systems

They are analogous to supercomputers in the 1980s.

Essential. Specialized. Powerful.

But they will not be the operating layer of global AI.

Over time, most production workloads will shift toward:

- Smaller domain-optimized models

- Routed model architectures

- Edge inference systems

- Hybrid compute clusters

The winners will orchestrate across model sizes, not bet exclusively on one.
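As a rough illustration of what a routed model architecture might look like, the following minimal sketch sends each bounded task to the cheapest model tuned for it and falls back to a general frontier model otherwise. The tier names, domains, and cost figures are hypothetical, and the routing rule is an assumption made for illustration rather than a description of any particular product.

```python
from dataclasses import dataclass
from typing import Sequence

@dataclass(frozen=True)
class ModelTier:
    name: str
    domains: frozenset      # bounded tasks this tier is tuned for; empty means general-purpose
    cost_per_call: float    # hypothetical relative cost units

def route(task_domain: str, tiers: Sequence[ModelTier]) -> ModelTier:
    """Pick the cheapest specialist for the domain, else the cheapest general-purpose tier."""
    specialists = [t for t in tiers if task_domain in t.domains]
    if specialists:
        return min(specialists, key=lambda t: t.cost_per_call)
    generalists = [t for t in tiers if not t.domains]
    if not generalists:
        raise ValueError(f"no model available for domain {task_domain!r}")
    return min(generalists, key=lambda t: t.cost_per_call)

# Hypothetical tiers, for illustration only.
TIERS = [
    ModelTier("frontier-general", frozenset(), 100.0),
    ModelTier("fraud-small", frozenset({"fraud_detection"}), 1.0),
    ModelTier("energy-edge", frozenset({"energy_forecasting"}), 0.5),
]

print(route("fraud_detection", TIERS).name)      # fraud-small
print(route("open_ended_research", TIERS).name)  # frontier-general
```

In this sketch the frontier model stays in the system as the fallback tier, which mirrors the argument that large models remain essential without being the default for every workload.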

IV. Lessons from Distributed Computing

In the late twentieth century:

- Supercomputers built by Cray remained essential.

- But distributed personal computing, enabled by Microsoft and Intel, reshaped the global economy.

Centralized compute did not disappear.

It became one tier in a layered system.

The transformative shift came from:

- Standardized operating layers

- Modular hardware ecosystems

- Distributed deployment

- Lower barriers to participation

AI is approaching a similar inflection.

V. The Strategic Vulnerability of Hyperscale-Only Thinking

Hyperscale infrastructure carries real strengths:

- Economies of scale

- Rapid iteration cycles

- High hardware utilization

But it also introduces structural risks:

- Energy concentration

- Supply chain dependence

- Geopolitical leverage imbalances

- Latency bottlenecks

- Data sovereignty conflicts

- Single points of failure in security

Centralization optimizes for providers.

Distributed orchestration optimizes for users and nations.

Resilience, cost, and fidelity increasingly matter more than scale prestige.

VI. Orchestration as the Decisive Layer

The core strategic question is not large versus small models.

It is:

Who controls orchestration across heterogeneous models and distributed compute?

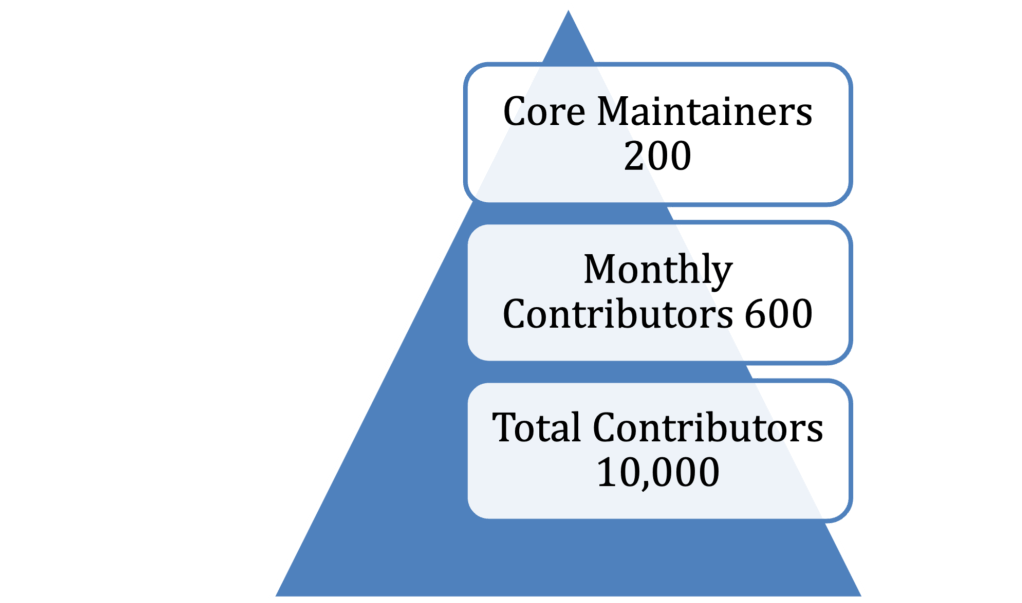

OpenTeams’ Nebari provides:

- Model lifecycle management

- Multi-model routing

- Sovereign deployment environments

- Distributed compute orchestration

- Integration across public, private, and edge systems

Nebari does not replace frontier models.

It integrates them.

Just as operating systems abstracted hardware complexity, AI operating layers will abstract model heterogeneity.

The dominant power in the AI era will not be the largest model builder.

It will be the orchestrator of inference.

VII. Addressing Common Objections

“Frontier models outperform small models.”

True in general benchmarks.

False in most bounded contexts across enterprise, research, and science.

Performance must be measured relative to task constraints and cost.
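One way to make this measurement concrete is to select the cheapest model that clears the task's accuracy requirement within budget, rather than the model with the highest benchmark score. The sketch below assumes that selection rule; the candidate names, accuracy figures, and costs are hypothetical placeholders, not measured results.

```python
from typing import Optional, Sequence, TypedDict

class Candidate(TypedDict):
    name: str
    task_accuracy: float       # accuracy on the bounded task, not a general benchmark
    cost_per_1k_calls: float   # hypothetical cost units

def cheapest_adequate(
    candidates: Sequence[Candidate], min_accuracy: float, max_cost: float
) -> Optional[Candidate]:
    """Return the lowest-cost candidate that meets both constraints, or None if none does."""
    eligible = [
        c for c in candidates
        if c["task_accuracy"] >= min_accuracy and c["cost_per_1k_calls"] <= max_cost
    ]
    return min(eligible, key=lambda c: c["cost_per_1k_calls"], default=None)

# Hypothetical figures, for illustration only.
CANDIDATES: list = [
    {"name": "frontier-general", "task_accuracy": 0.97, "cost_per_1k_calls": 40.0},
    {"name": "domain-small", "task_accuracy": 0.95, "cost_per_1k_calls": 0.8},
    {"name": "tiny-edge", "task_accuracy": 0.80, "cost_per_1k_calls": 0.1},
]

choice = cheapest_adequate(CANDIDATES, min_accuracy=0.93, max_cost=5.0)
print(choice["name"] if choice else "no adequate model")  # domain-small
```

Under these assumed numbers the frontier model scores highest but exceeds the budget, and the smallest model is cheapest but misses the accuracy floor, so the domain model is selected.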

“Distributed systems are more complex.”

Without orchestration, yes.

With standardized orchestration, no.

Complexity migrates from the user to the platform.

“Hyperscalers can integrate orchestration themselves.”

They will attempt to.

But sovereign environments require:

- Cross-cloud interoperability

- On-premise integration

- Air-gapped deployments

- National control layers

No single hyperscaler can credibly serve every sovereign boundary without conflicts of interest.

The opportunity lies in neutral orchestration.

“Capital markets favor hyperscale.”

Markets often overconcentrate in infrastructure cycles.

Telecommunications, railroads, and fiber optics all experienced this pattern.

Distributed optimization historically follows central buildout.

VIII. Implications for Data Centers

Data centers will not disappear.

They will evolve toward:

- Regional clusters

- Energy-proportional scaling

- Edge compute augmentation

- Hybrid public-private orchestration

Instead of monolithic GPU concentration, we will see federated compute networks.

Energy economics will drive this transition.

IX. Strategic Guidance for Nations

World leaders should pursue dual-track strategies:

- Maintain access to frontier model capability.

- Invest aggressively in distributed orchestration and domain models.

True sovereignty does not mean replicating hyperscalers.

It means controlling inference pathways, data governance, and energy exposure.

X. Conclusion

The current AI paradigm is not wrong.

It is incomplete.

Large models expand possibility.

Distributed orchestration secures sustainability.

Small models are the path to efficient fidelity.

The Immutable Law of Tensor Data reminds us:

Intelligence is not maximized by volume alone.

It is maximized by relevance and structure.

The coming era will reward those who:

- Optimize signal over scale

- Distribute rather than concentrate

- Orchestrate rather than centralize

- Build resilience rather than prestige

History does not eliminate centralized systems.

It absorbs them into distributed architectures.

AI is entering that phase now.

To learn more about Nebari, visit www.Nebari.dev